CRO and link testing sequence: a rollout order that works

A practical rollout order that helps you measure backlink impact while you change layout, copy, and conversion paths.

Why CRO changes can hide link impact

Link tests can look random when you change your site at the same time. A new backlink might be helping, but a layout tweak can change where people click. A copy update can shift intent. A new checkout step can add friction. When those things happen together, it becomes hard to say what actually caused the result.

This is masking: one change covers up the signal from another. In practice, it happens because links affect discovery and trust over time, while CRO changes can move conversion behavior immediately.

Here are a few common patterns:

- Traffic rises, but conversions drop because the main offer is harder to find after a redesign.

- Rankings improve for a few queries, but organic sessions stay flat because internal links were removed.

- Conversion rate jumps, but you can't tell if it was the new hero copy or the new link placements because both shipped in the same week.

- You see a spike and then a crash because tracking broke during a template update, not because the links stopped working.

The fix is simple: change fewer things at once, and change them in a planned order. If you adjust the conversion path (forms, pricing page structure, checkout steps) while also adding backlinks, you're mixing two different signals. One is on-page behavior. The other is off-page authority and crawl discovery. When both move at once, attribution turns into guesswork.

Sometimes the right move is to pause testing. Hold link tests and CRO experiments during a major rebuild, migration, or template overhaul. Also pause if analytics events are unreliable, attribution settings keep changing, or key pages are missing basic tracking. Even high-quality backlinks can look ineffective if the site is changing too quickly to measure cleanly.

If you want link impact to be readable, stabilize the page experience first. Then add links and watch what moves.

Set your goal and what must stay stable

Before you change anything, decide what you're trying to learn. Are you measuring the impact of new backlinks, trying to lift conversion rate, or doing both? You can do both, but only if you plan the sequence so results stay readable.

Start with one primary KPI:

- Link impact: organic sessions or organic conversions to the tested pages.

- CRO impact: conversion rate for a specific step (lead form submit, checkout completion, booked demo).

Then add a few supporting metrics so you can spot false wins or hidden damage. For most sites, a simple set is enough: organic sessions, rankings for a small keyword group, click-through rate, and revenue or lead quality.

Next, define your unit of testing. Link tests are easiest to read when the unit is one page or a small cluster of closely related pages. CRO tests are often cleaner when the unit is a single template or one funnel step.

During a link test, keep the conversion path stable. If you change layout, copy, form fields, pricing, and tracking while also adding links, you won't know what's driving the movement.

Write down what must not change while links are being evaluated:

- Offer and pricing

- Funnel steps (pages, form fields, checkout flow)

- Primary page layout and main CTA placement

- Tracking setup and conversion definitions

- Major internal linking and navigation labels

Keep a lightweight change log. One line per change is enough: what changed, when it went live, and why. Log new link placements the same way you would log a copy test. When results move, your log tells you the story.
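
If you prefer to keep the log in code rather than a spreadsheet, a minimal sketch could look like the following. The field names, the `kind` labels, and the sample entries are assumptions made for illustration, not a required format.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Change:
    """One line in the change log: what changed, when it went live, and why."""
    live_on: date
    kind: str   # hypothetical labels: "link", "cro", "tracking", "template"
    page: str   # URL path the change touched
    what: str
    why: str


def changes_between(log: list[Change], start: date, end: date) -> list[Change]:
    """Return entries that went live within [start, end], oldest first."""
    hits = [c for c in log if start <= c.live_on <= end]
    return sorted(hits, key=lambda c: c.live_on)


# Sample entries (invented for illustration).
log = [
    Change(date(2024, 5, 1), "template", "/", "Header redesign", "Brand refresh"),
    Change(date(2024, 3, 18), "cro", "/features/team-permissions",
           "Button text changed", "Clarify next step"),
    Change(date(2024, 3, 4), "link", "/features/team-permissions",
           "New backlink placement", "Start of link test"),
]

# Everything that went live in March, in date order.
march = changes_between(log, date(2024, 3, 1), date(2024, 3, 31))
for c in march:
    print(c.live_on.isoformat(), c.kind, c.page, c.what)
```

When a metric moves, filtering the log to the weeks before the movement is usually the first thing to check.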

Understand timing: SEO signals vs conversion signals

SEO and CRO run on different clocks. CRO changes can affect behavior the same day. Link effects usually arrive in stages, and the early stages are easy to mistake for nothing happening.

When you add backlinks, the first effect usually isn't more sales. It's search engines noticing the links and reassessing your pages. A common sequence looks like this:

- Crawl and discovery: bots find the new link and follow it

- Reprocessing: your page is rechecked and signals are updated

- Impressions move first: you show up more often (or for different queries)

- Clicks follow: traffic rises after positions settle

- Conversions come last: they depend on what that new traffic does on your site

CRO changes show up faster because they act on existing traffic. If you rewrite a headline, move a form, or change a checkout step, conversion rate can shift within days once you have enough visitors.

"Time to signal" is the delay between a change and the first indicator you can trust. For CRO, that's usually conversion rate. For SEO, early trustworthy signals are often impressions and average position, not revenue.

Don't judge link impact based on week-one conversions alone. If links are working, you might see more impressions with flat conversions at first, especially if you're starting to appear for broader searches.

Typical time windows that are safer than "a few days":

- CRO-only changes: 1-2 weeks (or until you have a solid sample size)

- Link-only changes: 4-8 weeks for direction, 8-12 weeks for confidence

- Both together: longer, unless you keep one side stable while the other runs
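
To turn those windows into calendar dates, here is a small sketch. The week ranges come from the list above; the dictionary layout and the `review_dates` helper are hypothetical names used for illustration.

```python
from datetime import date, timedelta

# Week ranges from the list above. Links get two windows: direction, then confidence.
WINDOWS_WEEKS = {
    "cro": {"readout": (1, 2)},
    "link": {"direction": (4, 8), "confidence": (8, 12)},
}


def review_dates(kind: str, live_on: date) -> dict[str, tuple[date, date]]:
    """Map each window name to its (earliest, latest) review date."""
    return {
        name: (live_on + timedelta(weeks=lo), live_on + timedelta(weeks=hi))
        for name, (lo, hi) in WINDOWS_WEEKS[kind].items()
    }


# Example: links went live on 4 March 2024.
windows = review_dates("link", date(2024, 3, 4))
for name, (earliest, latest) in windows.items():
    print(f"{name}: {earliest.isoformat()} to {latest.isoformat()}")
```

Putting the review dates on a calendar when the links go live makes it harder to judge the test too early.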

Baseline first: what to measure before any rollout

A clean baseline keeps results readable because you start from a stable, well-measured point.

Pick a baseline window of 2 to 4 weeks. During that time, freeze major changes: no new templates, no navigation redesign, no pricing page rewrite, and no big internal linking overhaul. Small fixes are fine, but log them.

Before you trust any chart, confirm tracking works end to end. A real person should be able to land on a page, take the main path (signup, purchase, lead form, demo request), and the conversion should show up correctly. Also check for double-counting, and confirm your consent setup isn't silently breaking forms.

Make sure key pages can be found. Check that important pages are indexable (not blocked by robots rules, accidental noindex tags, or incorrect canonicals). If a page isn't eligible to rank, new backlinks or better copy can't show their full effect.
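
A rough way to spot the three blockers named above is to inspect a page's HTML and your robots.txt content directly. The sketch below uses only Python's standard library and assumes you already have both as strings. It is deliberately simple: it does not fetch anything, does not check `X-Robots-Tag` headers, and compares canonicals naively (ignoring only a trailing slash). The function name and sample URLs are hypothetical.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class _HeadSignals(HTMLParser):
    """Collect the meta robots directive and canonical URL from page HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.robots = ""
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        a = {k: (v or "") for k, v in attrs}
        if tag == "meta" and a.get("name", "").lower() == "robots":
            self.robots = a.get("content", "").lower()
        elif tag == "link" and "canonical" in a.get("rel", "").lower().split():
            self.canonical = a.get("href")


def indexability_issues(url: str, html: str, robots_txt: str) -> list[str]:
    """Return reasons the page may not be eligible to rank (empty if none found)."""
    issues = []
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    if not rules.can_fetch("*", url):
        issues.append("blocked by robots.txt")
    signals = _HeadSignals()
    signals.feed(html)
    if "noindex" in signals.robots:
        issues.append("meta robots noindex")
    if signals.canonical and signals.canonical.rstrip("/") != url.rstrip("/"):
        issues.append(f"canonical points to {signals.canonical}")
    return issues


# Illustrative inputs (example.com URLs are placeholders).
robots_txt = "User-agent: *\nDisallow: /private/"
bad_html = ('<head><meta name="robots" content="noindex, follow">'
            '<link rel="canonical" href="https://example.com/other"></head>')
print(indexability_issues("https://example.com/pricing", bad_html, robots_txt))
```

Treat an empty result as "no obvious blockers found", not as proof that the page is indexed.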

Capture a "before" snapshot. At minimum, record:

- Rankings for your main queries and a few nearby queries

- Search impressions and clicks to the pages you plan to test

- Conversion rate and conversion volume for the same pages

- Traffic split by channel (organic vs paid vs direct)

- Notes on obvious seasonality (promo weeks, holidays, email blasts)

Finally, segment your baseline by page type. Landing pages often react quickly in conversion rate, while blog posts and product pages may show slower SEO movement. If you're pointing backlinks at a product page, track product-page metrics separately from blog metrics. Otherwise, one can hide the other.
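
As a sketch of that segmentation, the snippet below averages a baseline per page type from weekly rows. The row layout and the numbers are invented for illustration; in practice the rows would come from your analytics export.

```python
from collections import defaultdict

# Hypothetical weekly rows: (page_type, organic_sessions, conversions)
rows = [
    ("product", 400, 12),
    ("product", 420, 13),
    ("blog", 1500, 6),
    ("blog", 1700, 10),
]


def baseline_by_page_type(rows):
    """Average weekly sessions and overall conversion rate for each page type."""
    totals = defaultdict(lambda: {"sessions": 0, "conversions": 0, "weeks": 0})
    for page_type, sessions, conversions in rows:
        t = totals[page_type]
        t["sessions"] += sessions
        t["conversions"] += conversions
        t["weeks"] += 1
    return {
        page_type: {
            "avg_weekly_sessions": t["sessions"] / t["weeks"],
            "conversion_rate": t["conversions"] / t["sessions"] if t["sessions"] else 0.0,
        }
        for page_type, t in totals.items()
    }


baseline = baseline_by_page_type(rows)
for page_type, stats in baseline.items():
    print(page_type, stats["avg_weekly_sessions"], f"{stats['conversion_rate']:.2%}")
```

In this made-up data, blended numbers would be dominated by blog traffic, which is exactly how one page type hides another.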

Step-by-step rollout order that keeps results readable

If you want clean attribution, use a predictable order. Change one big lever at a time, and keep everything else steady long enough to see its signal.

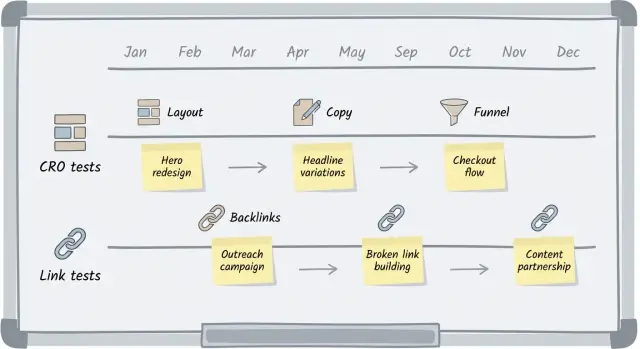

A rollout order that reduces masking

- Lock the conversion path first. Make sure forms, checkout, lead routing, confirmation pages, and tracking events are stable. If these move while links are being added, a conversion dip might be a broken form, not bad traffic.

- Make small CRO tweaks that don't change the topic. Early CRO work should be low-risk: button text, spacing, field labels, trust cues, and clarity around the next step. Topic shifts can change which queries you rank for and muddy link impact measurement.

- Launch link acquisition to a fixed set of target pages. Pick the pages you will support and don't swap targets mid-flight. Keep the on-page story mostly consistent while you measure.

- Wait through the SEO signal window, then evaluate with your change log. Avoid major edits on target pages during this window. Review rankings, organic clicks, and conversions together. "Rankings up, conversions flat" can mean better visibility but a weaker intent match.

- Only then run bigger CRO experiments. Save major changes for after link impact is clear: new hero message, new offer, a new page structure, or a new funnel step. Big changes can help, but they also reset your baseline.

A practical freeze rule

While links are being built, set clear freeze rules for target pages: no new templates, no navigation changes, no URL moves, and no rewriting the main headline. If edits must happen, batch them and record them.
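
If your changes are recorded as structured entries, the freeze rule can be checked mechanically. The sketch below is one possible shape: the `kind` labels, the `"*"` marker for sitewide changes, and the sample entries are all assumptions for illustration.

```python
from datetime import date

# Change kinds the freeze rule disallows on target pages (labels are hypothetical).
FROZEN_KINDS = {"template", "navigation", "url_move", "headline"}
SITEWIDE = "*"  # hypothetical marker for changes that touch every page


def freeze_violations(changes, target_pages, start, end):
    """Return changes that break the freeze on target pages within [start, end]."""
    return [
        c for c in changes
        if (c["page"] == SITEWIDE or c["page"] in target_pages)
        and c["kind"] in FROZEN_KINDS
        and start <= c["live_on"] <= end
    ]


# Sample entries (invented for illustration).
changes = [
    {"live_on": date(2024, 3, 10), "kind": "headline", "page": "/features/team-permissions"},
    {"live_on": date(2024, 3, 12), "kind": "button_text", "page": "/features/team-permissions"},
    {"live_on": date(2024, 3, 20), "kind": "navigation", "page": SITEWIDE},
    {"live_on": date(2024, 3, 22), "kind": "headline", "page": "/blog/some-post"},
]

violations = freeze_violations(
    changes,
    target_pages={"/features/team-permissions"},
    start=date(2024, 3, 4),
    end=date(2024, 4, 29),
)
for v in violations:
    print(v["live_on"].isoformat(), v["kind"], v["page"])
```

Small edits such as button text pass through here on purpose; they still belong in the log, but they don't break the freeze.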

How to pick pages for link tests without creating noise

The page you choose matters as much as the links. If the page is already in flux, you won't know whether movement came from backlinks or your own edits.

Start with stable intent. Pick a page that matches one main search need and stays consistent from headline to CTA. If a page tries to serve three audiences, small ranking or conversion changes may simply reflect a shift in traffic mix.

Avoid pages you plan to rewrite heavily in the next 4 to 8 weeks. That includes major copy refreshes, layout rebuilds, pricing changes, new navigation, and switching the primary conversion path.

Limit scope. Choose 1 to 3 primary target pages for link testing, not your entire site. It's easier to watch what changes, and it reduces the odds that unrelated internal changes blur the story.

Traits that usually make link tests easier to read:

- Steady traffic over the last few weeks (no big spikes from campaigns)

- Clear query match (the page already ranks for related terms)

- One main conversion action

- Few planned design or copy changes during the measurement window

- Simple internal linking you can keep stable

Next, decide where links should land: a hub page or a money page.

A hub page (guide, category, comparison) can spread value through internal links and often matches informational searches better. The downside is that conversion lift may be indirect and slower. A money page (product, pricing, demo) can show clearer conversion impact, but it's more sensitive to CRO tweaks and can be harder to rank if intent is mismatched.

Whichever you choose, keep internal linking stable while you measure. Don't change menus, breadcrumbs, sidebars, or "related pages" blocks during the link window. Even small internal link shifts can change crawl paths and rankings, which makes it easy to over-credit (or under-credit) external links.

Example scenario: links plus a homepage copy refresh

Imagine a SaaS company planning a homepage refresh while also adding new backlinks that point to a high-intent feature page (for example, "Team Permissions"). The goal is more qualified signups.

What often happens is messier. The team ships a new homepage headline, rearranges navigation, changes the main CTA, and launches backlinks in the same week. A week later, conversions move, rankings wobble, and nobody can say what caused what.

The homepage rewrite can change who clicks into the site and where they go next. A nav change can redirect traffic into a different path. A CTA change can raise or lower trial starts even if traffic quality stayed the same. Meanwhile, backlinks may take weeks to show their full effect in search, and the impact may be page-specific rather than site-wide.

A cleaner plan separates funnel changes from traffic changes:

- Week 0-2: Lock the funnel. Keep headline, nav, and primary CTA steady. Fix tracking. Record baseline for the feature page and signup flow.

- Week 3-6: Add backlinks to the feature page, but keep the homepage unchanged.

- Week 7-10: After the link phase shows a clear trend, test the new homepage copy and layout. If you A/B test, keep destination and CTA consistent across variants.

Track both search and conversion signals, because they move on different timelines. Watch impressions and average position for the feature page, plus a split of branded vs non-branded queries. Then track conversion rate for sessions that land on that feature page (not just site-wide).
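
Both splits are simple to compute once you have the rows. The sketch below assumes query rows shaped as `(query, impressions, clicks)` and sessions shaped as `(landing_page, converted)`. The brand term "acme" and all numbers are made up for illustration.

```python
def split_by_brand(query_rows, brand_terms):
    """Sum impressions and clicks separately for branded and non-branded queries."""
    totals = {
        "branded": {"impressions": 0, "clicks": 0},
        "non_branded": {"impressions": 0, "clicks": 0},
    }
    for query, impressions, clicks in query_rows:
        branded = any(term in query.lower() for term in brand_terms)
        bucket = totals["branded" if branded else "non_branded"]
        bucket["impressions"] += impressions
        bucket["clicks"] += clicks
    return totals


def landing_conversion_rate(sessions, landing_page):
    """Conversion rate for sessions that landed on landing_page."""
    outcomes = [converted for page, converted in sessions if page == landing_page]
    return sum(outcomes) / len(outcomes) if outcomes else 0.0


# Illustrative data; "acme" stands in for your brand name.
queries = [
    ("acme team permissions", 300, 45),
    ("role based access control saas", 1200, 36),
    ("team permissions software", 800, 24),
]
sessions = [
    ("/features/team-permissions", True),
    ("/features/team-permissions", False),
    ("/features/team-permissions", False),
    ("/features/team-permissions", False),
    ("/", True),
]

split = split_by_brand(queries, {"acme"})
rate = landing_conversion_rate(sessions, "/features/team-permissions")
print(split)
print(f"{rate:.0%}")
```

Substring matching on brand terms is crude. It will misfile misspellings and brand names that are also common words, so review the split by hand at least once.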

Keep an "unexpected events" log next to your numbers: promo launches, PR mentions, pricing changes, seasonality spikes, paid campaign start/stop, even major competitor moves. One line per event is enough.

Common mistakes that break attribution

Attribution fails when you change multiple things that can move results in opposite directions. The goal isn't perfect math. It's a readable story: what changed, when, and where.

Touching your offer mid-test is one of the fastest ways to ruin a link measurement window. Pricing changes, plan changes, discounts, and shipping thresholds can overpower most SEO effects.

Template-wide redesigns cause the same problem at a larger scale. A new header, navigation, page speed shift, or different internal linking pattern across the site can change both organic traffic and on-page behavior.

URL moves are another quiet attribution killer. Even with redirects, clarity drops if URLs are renamed, parameters change, canonicals shift, or redirects are inconsistent. Search engines need time to settle, analytics can split the page into multiple rows, and before/after comparisons get messy.

Five patterns that commonly invalidate results:

- Pricing or the core offer changes during the measurement window

- A sitewide template refresh rolls out while you expect to credit backlinks

- URLs are renamed or moved without clean redirects and clear notes on the exact date

- Too many pages are tested at once, with no stable control set

- You stop early after one strong week (or panic after a weak one)

Example: you point new backlinks to a category page, but that same week you rewrite the headline and change the primary CTA from "Start free" to "Book a demo." If signups fall, you might blame the links, even though the conversion-path change could be the main driver.

Quick checklist before you blame (or credit) the links

When rankings or conversions move after a link push, the tempting story is simple: the links worked, or the links did nothing. Do a quick sanity check first.

Answer yes or no:

- Have we changed the page topic, title tag, headings, or URL in the last 30 days?

- Did the conversion path change (steps, fields, checkout, calendar, routing)?

- Did we launch, pause, or scale paid campaigns that could lift brand searches and direct traffic?

- Did site speed drop, downtime happen, or did indexing issues appear (unexpected noindex, canonical changes)?

- Did the set of pages or tracked queries change between the periods we're comparing?

If you answered yes to any of them, don't throw out the test. Adjust your interpretation:

- If topic/title/URL changed, restart the SEO measurement window from the change date.

- If the conversion path changed, judge links mainly on SEO signals until the funnel settles.

- If paid campaigns changed, separate brand vs non-brand queries and compare them separately.

- If speed or indexing had issues, fix that first and annotate the dates.

- If pages or queries changed, lock the cohort: same URLs, same tracked queries, same time ranges.
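
The checklist and its adjustments map cleanly onto a lookup table. This is only a sketch: the flag names are hypothetical, and the advice strings are taken from the list above.

```python
# Flag names are hypothetical; the advice text mirrors the adjustments listed above.
ADJUSTMENTS = {
    "topic_title_or_url_changed": "Restart the SEO measurement window from the change date.",
    "conversion_path_changed": "Judge links mainly on SEO signals until the funnel settles.",
    "paid_campaigns_changed": "Separate brand vs non-brand queries and compare them separately.",
    "speed_or_indexing_issues": "Fix that first and annotate the dates.",
    "pages_or_queries_changed": "Lock the cohort: same URLs, same tracked queries, same time ranges.",
}


def interpret(flags: dict[str, bool]) -> list[str]:
    """Return the adjustment for every checklist item flagged True, in checklist order."""
    return [advice for key, advice in ADJUSTMENTS.items() if flags.get(key, False)]


# Example: the funnel changed and a paid campaign was paused during the window.
advice = interpret({"conversion_path_changed": True, "paid_campaigns_changed": True})
for line in advice:
    print("-", line)
```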

Next steps: build a repeatable testing rhythm

A clean sequence only helps if you can repeat it.

Pick a cadence you can stick to. Weekly is for monitoring and catching problems early. Monthly is for decisions, because it gives rankings and traffic time to move.

A simple rhythm:

- Weekly check-in: confirm tracking, note site changes, scan for anomalies

- Monthly decision point: review performance vs baseline, decide what to do next, document why

- Quarterly cleanup: retire old tests, consolidate learnings, refresh your page list

Before you launch anything, set pre-committed rules for keep, pause, scale, or reset. They don't need to be perfect, just consistent.
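
One way to pre-commit is to write the rules down as a function before the test starts. The sketch below is illustrative only: the 20% click-lift threshold, the input names, and the order of the checks are assumptions, so set your own before launch.

```python
# Hypothetical threshold; choose your own before the test starts.
SCALE_MIN_CLICK_LIFT_PCT = 20.0


def decide(clicks_change_pct: float, conversions_change_pct: float,
           tracking_ok: bool, major_change_in_window: bool) -> str:
    """Return "pause", "reset", "scale", or "keep" from pre-committed rules."""
    if not tracking_ok:
        return "pause"   # numbers can't be trusted until tracking is fixed
    if major_change_in_window:
        return "reset"   # the baseline moved, so restart the measurement window
    if clicks_change_pct >= SCALE_MIN_CLICK_LIFT_PCT and conversions_change_pct >= 0:
        return "scale"   # clear lift in clicks without a conversion drop
    return "keep"        # keep running unchanged and review next month


print(decide(25.0, 3.0, tracking_ok=True, major_change_in_window=False))
```

The point is less the specific numbers than having them fixed in advance, so that one strong or weak week doesn't change the decision.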

If you need backlinks you can plan around, treat links like a controlled input: predictable timing, known pages, and known domains. For teams that want that kind of consistency, services like SEOBoosty (seoboosty.com) focus on securing placements from a curated inventory, which can make it easier to keep the rest of the test stable.

Next step: pick 1-3 target pages that matter, freeze major CRO changes on those pages for the measurement window, and run one clean link test cycle end to end. Keep a one-page log of what changed, when it changed, and what you decided.

FAQ

What does “masking” mean in link testing?

Masking is when two changes happen close together and one hides the signal of the other. Backlinks usually influence discovery and rankings over weeks, while CRO edits can change conversion behavior immediately, so the faster change can make the slower one look ineffective.

Why did traffic go up after new backlinks, but conversions went down?

Treat it as a measurement problem first, not a performance problem. Check whether you changed layout, CTAs, forms, pricing, internal links, or tracking during the same window, because any of those can lower conversion rate even while SEO visibility improves.

What’s the safest order to run CRO work and backlink work?

Freeze the conversion path and tracking, then run the link test on a fixed set of pages for long enough to see SEO signals settle. After you can see a clear direction from the link phase, run larger CRO experiments so you’re not mixing causes.

Which metrics should I use to measure backlink impact versus CRO impact?

For backlink impact, start with organic impressions, average position, and organic clicks to the target page(s), then look at organic conversions after that. For CRO impact, focus on conversion rate for the specific step you changed, using the same traffic segment and timeframe.

How long should I wait before judging whether new backlinks worked?

Usually 4–8 weeks is enough to see direction on rankings and clicks, and 8–12 weeks is safer for confidence, especially if your site isn’t crawled frequently. If you changed titles, URLs, templates, or internal linking during that period, restart the clock from the last major change.

How do I choose the right pages for a clean link test?

Pick one page or a small cluster that targets the same intent and is unlikely to be rewritten soon. Avoid pages with upcoming redesigns, pricing edits, URL moves, or major internal linking changes, because those create noise that makes attribution guesswork.

What should I include in a change log during testing?

Log what changed, when it went live, and why, including CRO edits, tracking tweaks, template releases, and new link placements. When you see a spike or drop, the log helps you separate “SEO moved” from “the funnel changed” or “tracking broke.”

How can I tell if tracking issues are causing false link test results?

Run a real end-to-end test: land on the page, complete the main action, and confirm the conversion records correctly in your analytics. Also watch for doubled events, broken consent flows, missing parameters, or split reporting caused by URL variants, because those issues can mimic a conversion drop.

What changes most often ruin attribution during a link test?

Big moves like pricing changes, new funnel steps, navigation changes, URL renames, template-wide redesigns, or internal link reshuffles can overwhelm or distort SEO effects. If you must do them, batch changes, document exact dates, and avoid judging link performance until the site is stable again.

How can a service like SEOBoosty help keep link tests more measurable?

If you want predictable timing and known placements, using a service that provides curated, pre-arranged placements can make planning easier because you’re not waiting on uncertain outreach timelines. The key is still to keep target pages and the conversion path stable during the measurement window so the link signal is readable.